
As technological capabilities progress, political warfare is becoming an even more serious threat to democratic elections. David M. Rubenstein Fellow Alina Polyakova analyzes past disinformation campaigns and political warfare tools employed by hostile foreign actors in Russia and elsewhere. She also discusses how these tactics are influencing U.S. midterm and other elections and what the U.S. can do to protect its electoral system.

What you need to know:

- One of the goals of Russian information warfare is to create a society in which we can’t tell the difference between fact and fiction.

- The Russian government is becoming more sophisticated in mastering the tools of political warfare for the digital age. This includes the use of bots, trolls, and microtargeting to spread disinformation.

- The strategies are not new, but the digital tools are.

- Over the next few months we are going to see more disinformation campaigns, including fake websites that work together as a network to spread disinformation, fake personalities and entities on Twitter and Facebook, and manipulation of the algorithms of social media networks and platforms, including Google, YouTube, and others. We are not paying enough attention to algorithmic manipulation.

- The more frightening development that we are likely to see in the next 12-16 months is the use of artificial intelligence to enhance the tools of political warfare.

- Right now, humans control and produce online entities like bots and trolls. But soon, disinformation campaigns will become more automated, smarter, and more difficult to detect. AI-driven disinformation will be better targeted to specific audiences, and AI-driven online entities will be able to predict and manipulate human responses. At some point very soon, we won’t be able to tell the difference between automated accounts and human entities.

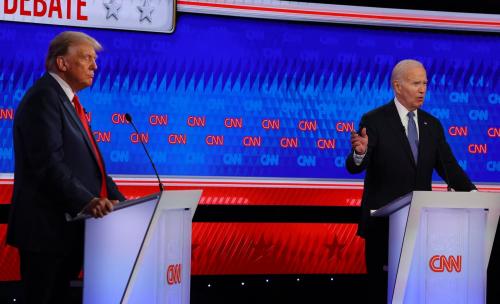

- The phenomenon of deep fakes, fake video and audio that appear convincingly real, is going to be used by malicious actors to mislead and deceive us. Debunking this content will be like playing whack-a-mole. This is going to become a reality much sooner than we’re comfortable with.

- Democratic societies, including the United States, can do many things to inoculate themselves against these kinds of tools of political warfare, disinformation, and cyberattacks.

- Step one is getting the U.S. government to develop a strategy of deterrence when it comes to political warfare.

- We currently don’t have such a strategy, because we diluted or dissolved the institutions and capabilities we had during the Cold War.

- Step two is acknowledging individuals’ responsibility to be more critical consumers of information and recognizing that the information we consume is not neutral but often manipulated by malicious actors. As citizens, we have a responsibility to be more discerning and aware.

Commentary

What do Russian disinformation campaigns look like, and how can we protect our elections?

October 3, 2018