Teach For America, the alternative teaching certification program that places highly selected corps members into high-need schools for two years, has had a string of bad news lately. It has run into problems recruiting corps members in the post-recession economy. A new book of counter-narratives written by disenchanted TFAers (a term covering both active corps members and TFA alumni) just came out.

And, perhaps most challenging of all, Mathematica’s recent evaluation of TFAers’ classroom effectiveness in elementary grades as the program scaled up in size in 2010 (an expansion facilitated with funds from the U.S. Department of Education) found no difference in classroom performance between TFAers and comparison teachers. This headline result ran counter to several earlier studies showing that TFAers generally outperform comparison teachers in math (and science).

With this apparent reversal of fortune, one may be tempted to ask whether TFA is losing its luster with age. Yet, a closer examination of this and other recent studies on TFA suggests such a conclusion may be too rash.

First, a closer look at the Mathematica study shows that the main finding of “no significant difference” is not as dire as it may appear. In the sub-sample composed of Pre-K through 2nd grade teachers, the study estimated a surprisingly large, positive, and significant TFA effect in reading. Also of note: although the evaluation used random assignment, comparison teachers in the Mathematica study had nearly 14 years of prior teaching experience, on average, while the TFAers were predominantly novices. The “no significant difference” critique loses its edge when the comparison is against this group.

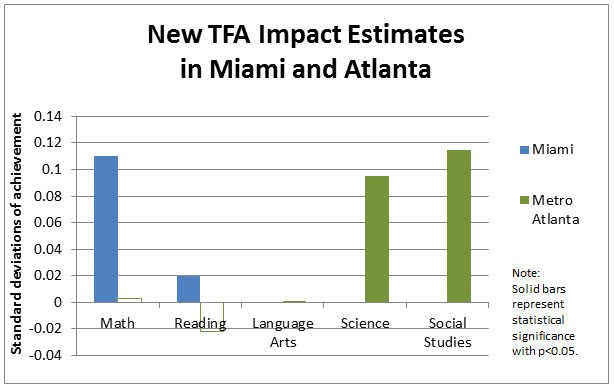

In addition to the Mathematica study, I also point readers to two recently released evaluations of TFA that I was involved with, one in Miami and another in metro Atlanta. Both cover similar time periods and regions that saw large expansions of the TFA corps. Moreover, both show positive gains associated with TFAers, though in somewhat surprising and different ways.

The Miami study covers six school years starting in 2008-09, during which time the TFA corps in the region tripled in size. My colleagues at AIR and I analyzed student learning gains based on Florida’s standardized FCAT in reading and math, for tested students in grades four and up. Comparison teachers are similarly experienced teachers in the same schools with similar students.

In Miami, we found students in TFA classrooms scored 0.11 standard deviations higher in math, roughly equivalent to two months of additional learning. This estimate falls in the middle of the range of magnitudes reported in prior TFA studies, suggesting TFA is achieving outcomes consistent with its prior performance.

What’s more, we found that TFAers significantly outperformed comparison teachers in reading, by about 0.02 standard deviations, or about two weeks of learning. Though I would describe the size of this effect as “modest” at best, it does stand in contrast to prior studies, which almost uniformly find no evidence of TFA impact in reading (the one noteworthy exception being the Mathematica evaluation above). Interestingly, it was the most recent corps members, those who arrived during the TFA surge, who were most effective in the classroom.

In the second study, Tim Sass and I gathered data from three districts in the Atlanta metropolitan area that have hired significant numbers of TFAers in recent years. The data start in 2005-06 and extend through 2013-14. As in Miami and for TFA nationwide, the number of corps members surged starting in 2010, and most unique TFA observations in the sample come from this surge period. We analyze student learning gains on Georgia’s CRCTs (for grades 4-8); these standardized tests are unique in that they assess students on five subjects (math, reading, language arts, science, and social studies). Again, comparison teachers in this study are similarly experienced teachers in the same schools with similar students.

In these three districts, we found evidence of TFAers significantly outperforming comparison teachers on the CRCTs in science and social studies, with impact estimates of 0.10 and 0.12 standard deviations, respectively. Interestingly, we found no significant differences in performance for any of the other subjects, including math.

Discerning readers may notice that the Atlanta study period includes several years in which widespread test manipulation was taking place in Atlanta Public Schools. Sass and I used four separate approaches to filter out suspect observations, based on erasure analysis performed in 2009 (the peak year of alleged cheating). Our estimates of TFA impacts, however, show no sensitivity to the inclusion of these suspect observations.

So, returning to the initial question: are TFAers becoming less effective as the program ages? The Mathematica study is not as definitive on this as many might believe based on headline findings alone. In addition, the evidence my co-authors and I find in both Miami and metro Atlanta suggests this is not the case. Though none of these studies’ findings comport exactly with expectations of TFA performance based on earlier results pre-dating the surge in TFA numbers, all suggest that TFAers are still contributing something extra to the classrooms they lead.

Disclosure: The John S. and James L. Knight Foundation funded the Miami evaluation, and Teach For America funded the metro Atlanta evaluation (which it procured through a competitive bid process). The evaluations were carried out independently, and Teach For America had no editorial privilege in the presentation of either evaluation’s findings.

Commentary

Losing its luster? New evidence on Teach For America’s impact on student learning

October 27, 2015